In a landscape where clinical assessment faces growing demands for feedback quality, reliability, and speed, Qpercom is pioneering the use of Artificial Intelligence (AI) to meet these expectations. Our recent advancements incorporate AI into key features of our assessment platform, focusing on three major areas: station notes, AI-generated feedback using Claude 4, and advanced psychometric analysis. These innovations represent a deliberate blend of research-driven development and practical functionality to benefit medical educators, assessors, and, most importantly, students. Let’s take a closer look at each of these features.

1. AI and Station Notes

At the heart of Objective Structured Clinical Examination (OSCE) assessment is the quality of station design. Historically, writing station notes was a neglected administrative task. Qpercom’s AI tool now assists examiners in crafting clear, purpose-driven instructions based on learning outcomes. When prompted effectively, Claude 4 can generate structured station briefs within minutes, including:

- Station purpose linked to expected competencies.

- Descriptions of ideal and poor performance.

- Key marking criteria.

This feature supports better standardisation across assessments and improves the feedback loop by embedding clarity from the outset. Furthermore, it lowers the entry barrier for examiners new to designing assessment stations.

2. AI Feedback

Perhaps the most transformative application of AI in Qpercom is the use of Claude 4 to generate personalised student feedback. Leveraging structured data from examiner scores, global rating scales (GRS), and written comments, our AI model synthesises this input into a coherent feedback summary. Subsequently, each student receives:

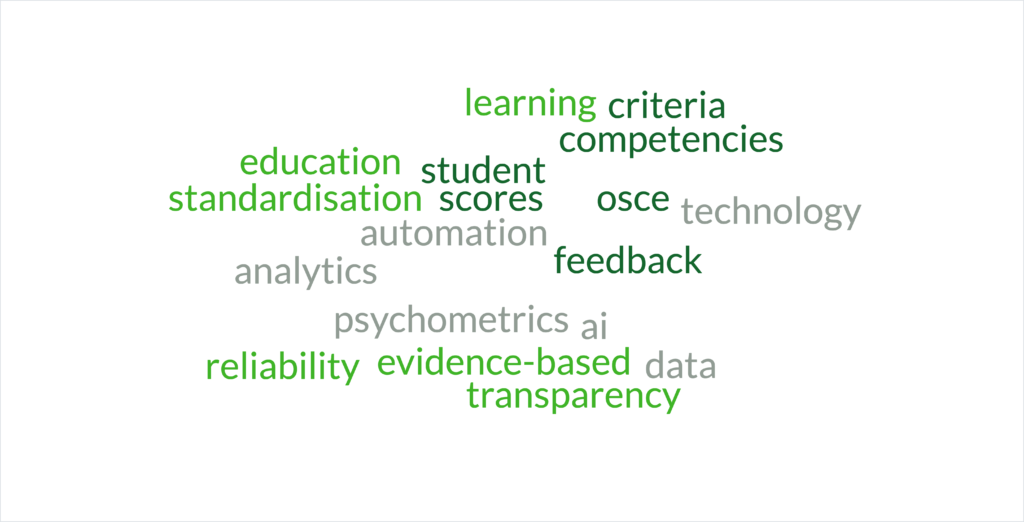

- A word cloud reflecting their performance themes.

- Three positive aspects of their performance.

- Three improvement points tailored to the assessed station.

This feedback is automatically aligned with the station’s purpose and expected outcomes, resulting in more specific, relevant insights. The aim is not to replace human judgment, but to scale the feedback process without losing pedagogical value.

3. AI Advanced Analysis

Alongside our feedback innovations, Qpercom continues to invest in evidence-based analysis tools, which include:

- Borderline Regression Methods (Forecast 1 & 2).

- Standard Error of Measurement (SEM).

- Global Rating Consistency Metrics.

These tools empower institutions to make informed, transparent pass/fail decisions while meeting regulatory standards. They also support internal validation processes and quality assurance reviews.
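For readers less familiar with the first two measures listed above, here is a minimal Python sketch of the general textbook techniques. It is not Qpercom’s implementation and does not model the Forecast 1 and 2 variants. It assumes global ratings are coded numerically (here 0 = fail, 1 = borderline, up to 4 = excellent), that the cut score is the checklist score a simple linear regression predicts at the borderline grade, and that SEM is computed as the score standard deviation multiplied by the square root of one minus a reliability coefficient. All function names and sample data are hypothetical.

```python
from statistics import mean, stdev


def borderline_regression_cut_score(global_ratings, checklist_scores, borderline_grade):
    """Regress checklist scores on global ratings (ordinary least squares)
    and return the score predicted at the borderline grade."""
    if len(global_ratings) != len(checklist_scores):
        raise ValueError("ratings and scores must have the same length")
    x_bar = mean(global_ratings)
    y_bar = mean(checklist_scores)
    sxx = sum((x - x_bar) ** 2 for x in global_ratings)
    if sxx == 0:
        raise ValueError("global ratings must vary to fit a regression line")
    sxy = sum(
        (x - x_bar) * (y - y_bar)
        for x, y in zip(global_ratings, checklist_scores)
    )
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept + slope * borderline_grade


def standard_error_of_measurement(scores, reliability):
    """SEM = SD * sqrt(1 - reliability), using the sample standard deviation."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must be between 0 and 1")
    return stdev(scores) * (1.0 - reliability) ** 0.5


# Hypothetical station data: one global rating and one checklist score per student.
ratings = [0, 1, 1, 2, 2, 3, 3, 4]
scores = [8, 12, 13, 16, 17, 21, 22, 25]

cut = borderline_regression_cut_score(ratings, scores, borderline_grade=1)
sem = standard_error_of_measurement(scores, reliability=0.80)  # assumed reliability
print(f"Cut score: {cut:.2f}, SEM: {sem:.2f}")
```

In practice, the SEM is often used to flag students whose scores fall close enough to the cut score that the pass/fail decision deserves a second look.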

Research Meets Reality

Our AI innovations are rooted in both scientific research and practical deployment. Internally, we conduct experimental evaluations of AI-generated feedback using real OSCE data, in collaboration with psychometric experts. Externally, we offer these features as optional tools for clients, designed to complement (not replace) existing feedback processes.

Join Our Validation Research Network

We’re calling on medical schools and assessment leads to participate in this next phase of innovation. Whether you’re looking to pilot AI feedback in OSCEs, experiment with new station design workflows, or contribute to international validation studies, we welcome your collaboration.

Together, we can ensure that AI-enhanced feedback not only saves time but supports real learning, fairness, and evidence-based decision-making in clinical education.

Stay tuned for upcoming workshops and collaborative publication opportunities. Reach out to our team to learn how your institution can get involved.

Happy holidays from all of us at Qpercom. We look forward to working with you in 2026!