“In the aviation industry, if anything goes wrong, procedures are adjusted globally. If in medical education something goes wrong, students pass or fail without appropriate reason. For assessment of clinical skills in healthcare, international standards are Qpercom’s first priority.”

– Dr Thomas Kropmans, CEO, Qpercom

Qpercom was awarded third place for ‘Best International Collaboration Project’ at The Education Awards 2021.

The placing was awarded for our work in 2020 with NUI Galway and seven other universities on a mutual assessment strategy for time-constrained practical examinations, known as OSCEs (Objective Structured Clinical Examinations).

The Project

Dr Thomas Kropmans, initiator, co-founder and CEO of Qpercom, is a senior lecturer in Medical Informatics & Medical Education at NUI Galway. He invited 20 EU universities to take part in a 2019–2020 collaboration proposing a mutual assessment strategy for time-constrained practical examinations known as Objective Structured Clinical Examinations (OSCEs). After sensitive negotiations, eight universities joined the Irish Higher Education initiative. A mix of academic and non-academic co-authors signed up for the project, with participants from:

- (1) College of Medicine, Nursing & Health Sciences, School of Medicine, National University of Ireland, Galway, Ireland

- (2) Department of Clinical Medicine, Faculty of Medicine, University of Bergen, Bergen, Norway

- (3) Faculty of Veterinary Medicine, Utrecht University, the Netherlands

- (4) Faculty of Medicine & Health Sciences, Norwegian University of Science and Technology, Trondheim, Norway

- (5) Faculty of Medicine, Umeå University, Umeå, Sweden

- (6) St George’s, University of London, School of Medicine, London, England

- (7) KU Leuven, Faculty of Medicine, Leuven, Belgium

- (8) School of Pharmacy, University College Cork, Cork, Ireland

- (9) Qpercom Ltd, through a research internship

The collaborators were Eirik Søfteland MD, PhD (2); Anneke Wijnalda (3); Marie Thoresen MD MMSc (4); Magnus Hultin PhD (5); Rosemary Geoghegan PhD (1); Angela Marie Kubacki (6); Nick Stassens (7); Suzanne McCarthy PhD (8); and research intern Stephen Hynes MBBS (1, 9). Assessment data held in Qpercom’s advanced assessment solution were used for an international comparison, with the aim of setting an international standard in clinical skills assessment.

“Imagine if, on a flight abroad, the first officer and pilot of the Boeing 737 that you’re on announces, after welcoming passengers on board, that he recently crashed in 5 out of 10 flight training scenarios. However, he then proudly claims that he landed smoothly in the other 5. Would you feel comfortable on a flight with this person in charge?”

Performance

Up until 2000, OSCEs were mostly administered on paper, a laborious and costly method of exam delivery, and one that restricted the use of the captured data for research purposes. In 2012, Dr Thomas Kropmans first published a paper about a multi-institution, web-based assessment management information system for retrieving, storing and analysing station-based assessment data. At the School of Medicine of the National University of Ireland, Galway, this online approach saved 70% of administration time, reduced errors by 30% compared with the previous paper-based process, and allowed instant quality assurance (QA) analysis.

To our knowledge, cross-institutional collaboration on quality assurance in assessment has never been done before in medicine, veterinary medicine and pharmacy. The more widespread adoption of digital assessment methods now makes such collaboration possible through a shared European (EU) assessment strategy. The main purpose of this collaboration was to compare quality assurance (QA) data from penultimate clinical skills assessments across European higher education institutions.

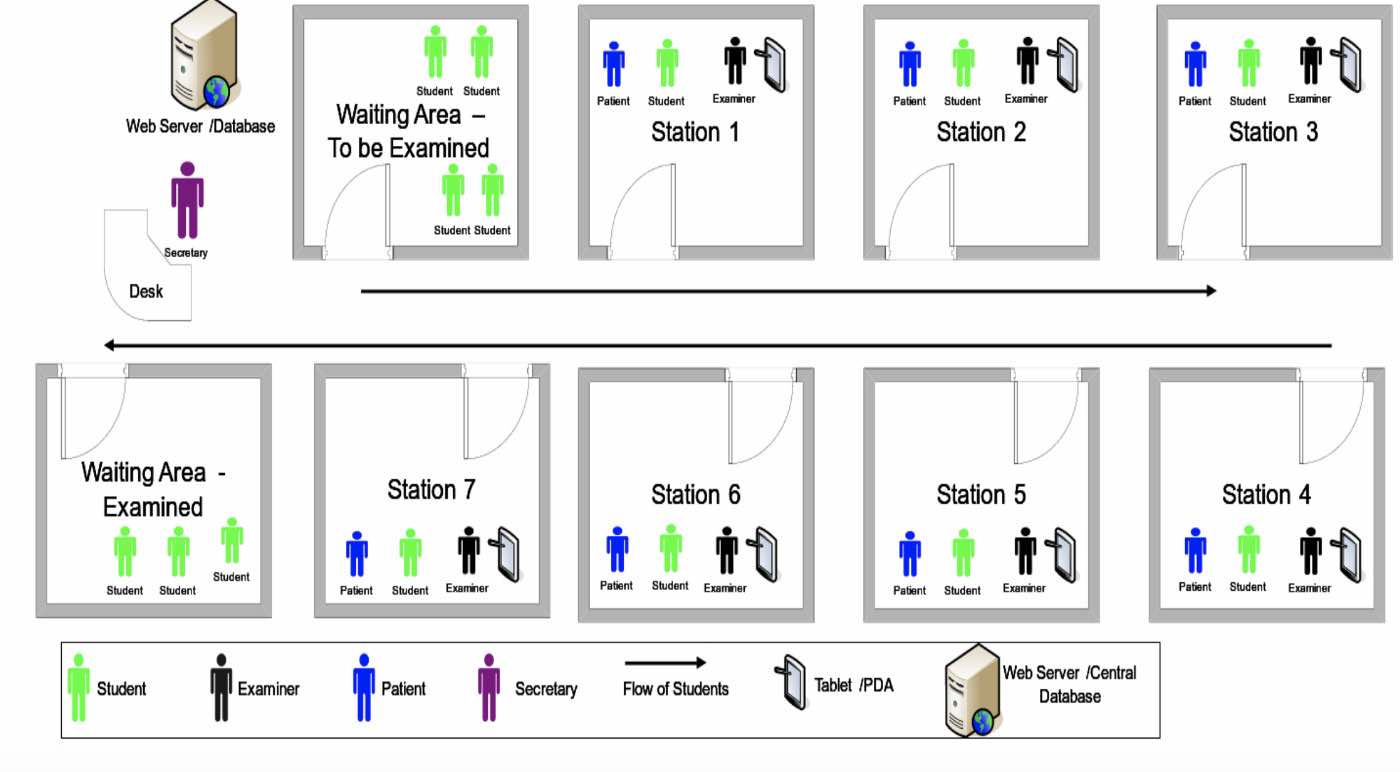

Figure 1. Qpercom’s advanced assessment platform, which takes students through a consecutive series of stations. Assessment logistics and data are retrieved, stored and analysed online. It is an award-winning, ISO 27001-certified international (video) software solution.

During a station-based assessment, also known as an Objective Structured Practical Examination (OSPE) or an Objective Structured Clinical Examination (OSCE; pronounced OSKEE), students go through a consecutive series of stations, performing a time-constrained clinical task or scenario in each one. In each station, an examiner observes each student and marks the student’s performance on either a detailed item scoresheet or a more global rating scoresheet. After completing the scoresheet, the examiner also rates the performance on a 3- to 5-point Global Rating Scale (GRS), giving a professional judgment of the observed performance as fail, borderline, pass, good or excellent.

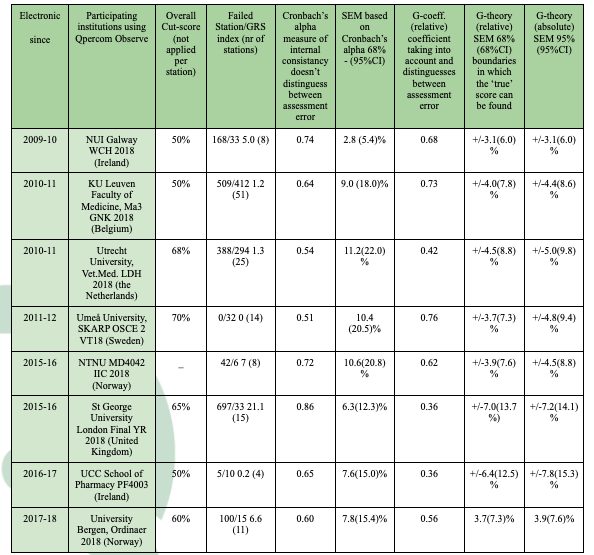

Cut-off scores differ noticeably between universities, ranging from 50% to 70% across institutions in Western, Central and Northern Europe. Universities also apply different rules on the number of stations a student must pass. NUI Galway applies a 50% rule: a student who passes at least 50% of the stations with an average mark of at least 50% passes the exam. Umeå University in Sweden, by contrast, applies an overall cut-score and also treats each failed station as a ‘red flag’; two red flags mean the student fails the OSCE. Students at St George’s, University of London must achieve the overall numerical pass mark (65%) and pass at least 65% of the stations.
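The three pass rules above differ only in which conditions a candidate must meet, so they can be expressed as one parameterised check. The following is a minimal Python sketch, not Qpercom’s implementation: `PassRule` and `passes` are hypothetical names, each station is assumed to have a single pass mark equal to the overall cut-score, and a ‘failed station’ means a station scored below that mark (Umeå’s red flags may in practice come from the Global Rating Scale instead). The Umeå cut-score of 60% is a placeholder, because the text does not give it.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class PassRule:
    """Hypothetical, simplified encoding of an institution's OSCE pass rule."""
    overall_cut: Optional[float]       # minimum mean station score (%), None if not used
    station_cut: float                 # score (%) needed to pass a station (assumed uniform)
    min_fraction_passed: float = 0.0   # minimum share of stations that must be passed
    max_failed: Optional[int] = None   # maximum failed stations ("red flags") allowed


def passes(scores: List[float], rule: PassRule) -> bool:
    """Return True if a candidate's station scores (in %) satisfy every part of the rule."""
    if not scores:
        raise ValueError("at least one station score is required")
    failed = sum(1 for s in scores if s < rule.station_cut)
    passed_fraction = (len(scores) - failed) / len(scores)
    if rule.overall_cut is not None and mean(scores) < rule.overall_cut:
        return False
    if passed_fraction < rule.min_fraction_passed:
        return False
    if rule.max_failed is not None and failed > rule.max_failed:
        return False
    return True


# Rules as described in the text, simplified to one station pass mark each.
NUI_GALWAY = PassRule(overall_cut=50, station_cut=50, min_fraction_passed=0.5)
ST_GEORGES = PassRule(overall_cut=65, station_cut=65, min_fraction_passed=0.65)
# Umeå: two red flags mean failure, so at most one failed station is allowed.
# The 60% cut-scores are placeholders, not Umeå's actual values.
UMEA = PassRule(overall_cut=60, station_cut=60, max_failed=1)

scores = [72, 48, 66, 55, 40, 80, 62, 58]  # invented scores for one candidate
print(passes(scores, NUI_GALWAY), passes(scores, ST_GEORGES), passes(scores, UMEA))
# prints: True False False
```

The same candidate (mean 60.1%) passes under the Galway rule, falls below the 65% overall mark at St George’s, and collects four red flags under the placeholder Umeå rule, which illustrates how much the choice of rule alone can change a decision.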

Valid and reliable assessment, and therefore sound pass and fail decisions, depend on many variables:

- The assessment procedure

- The assessment scoresheet

- The examiner as a subject matter expert (or not) and their level of assessment experience

- Divergence between stations, examiners and learning/assessment objectives

- Last but not least, the student’s level of expertise/competence (knowledge, skills and attitude)

Qpercom’s advanced assessment solution retrieves, stores and analyses assessment data electronically for all of its clients. Like a pilot’s cockpit, Qpercom’s assessment dashboard provides insight into the quality of each of the factors listed above. Analysis of assessment output shows that every aspect of the assessment procedure introduces some form of error. This (measurement) error affects whether a student should pass or fail, and whether they progress to the next level of training or ‘crash’, to draw a parallel with the aviation industry. Similarly, the institution and its examiners might ‘crash’ if their assessment procedure is not valid or reliable.

Most participating universities don’t apply the Standard Error of Measurement (SEM) when deciding whether to pass or fail students after the assessment. However, Hays (2008) argues that the SEM is a useful tool for making confident and defensible decisions about candidates whose examination scores are at or below the borderline pass mark. As long as attention is paid to established examination design principles, the SEM should be considered in any examination. This initial EU-wide collaboration showed that applying the SEM and confidence intervals to the observed raw scores, particularly when the SEM is calculated with the more modern Generalizability Theory (G-Theory; relative or absolute SEM) rather than the widely used Classical Test Theory, seemed to be more accurate than any other measure of reliability. Furthermore, the SEM and the 68% and 95% confidence intervals around the observed raw scores are expressed in the same measurement unit as the observed (examination) score itself. This is exactly what Qpercom offers its clients, and what distinguishes it from established competitors such as Pearson VUE, ExamSoft and Blackboard.
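Hays’s point can be made concrete with the Classical Test Theory formula SEM = SD × √(1 − α), where SD is the standard deviation of the examination scores and α is the reliability coefficient (here Cronbach’s alpha). The Python sketch below is a hypothetical illustration, not Qpercom’s method: it builds a 68% (±1 SEM) or 95% (±1.96 SEM) interval around an observed score and flags candidates whose interval contains the cut-score. The SD, alpha and cut-score values are invented, and a G-theory relative or absolute SEM could be passed in place of the alpha-based SEM without changing the decision logic.

```python
import math
from typing import Tuple


def sem_from_alpha(sd: float, alpha: float) -> float:
    """Classical Test Theory SEM: the score SD scaled by sqrt(1 - reliability)."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie between 0 and 1")
    return sd * math.sqrt(1.0 - alpha)


def confidence_interval(score: float, sem: float, z: float) -> Tuple[float, float]:
    """Interval of +/- z SEMs around an observed score (z=1 ~ 68%, z=1.96 ~ 95%)."""
    return score - z * sem, score + z * sem


def classify(score: float, cut: float, sem: float, z: float = 1.96) -> str:
    """Flag candidates whose confidence interval straddles the cut-score."""
    low, high = confidence_interval(score, sem, z)
    if low >= cut:
        return "pass"
    if high < cut:
        return "fail"
    return "borderline: review"


# Illustrative values only: score SD of 8 percentage points, alpha of 0.75, cut-score of 50%.
sem = sem_from_alpha(sd=8.0, alpha=0.75)  # 8 * sqrt(0.25) = 4.0
for observed in (40.0, 55.0, 60.0):
    print(observed, classify(observed, cut=50.0, sem=sem))
```

With these numbers, 40% is a clear fail and 60% a clear pass, while 55% is flagged for review because its 95% interval (about 47.2% to 62.8%) contains the 50% cut-score. Because the SEM is in percentage points, the interval is read directly on the examination score scale.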

Collaborations

Although a large body of research on the QA of observational assessments exists, our collaboration between universities produced a unique and diverse outcome. QA measures differed between institutions, as did the names of assessment stations, the numbers of participants, the numbers of stations and/or scenarios, and the learning outcomes. For most universities, Cronbach’s alpha, the Classical Test Theory QA measure, overestimated reliability compared with the G-theory analysis. The G-theory relative SEM, with its 68% (1 SEM) and 95% (2 SEM) confidence intervals, could well be added to existing classical QA measures. Raising the pass bar for criterion-based assessment and reducing the number of false-positive pass decisions in clinical skills exams will improve the quality of higher education output.
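The comparison between Cronbach’s alpha and G-theory can be sketched for the simplest case, in which every student sits every station and stations are the only facet. The Python below is a minimal illustration under that assumption, not the collaboration’s analysis code; the function names and the five-by-four score matrix are invented. In this one-facet design the relative G coefficient is algebraically equal to alpha, while the absolute coefficient (Φ) and the absolute SEM also count differences in station difficulty. Alpha can exceed G-theory estimates once further facets, such as examiners or circuits, are modelled, which this sketch does not attempt.

```python
import math
from statistics import mean, variance
from typing import Dict, List

Matrix = List[List[float]]  # rows = students, columns = stations, scores in %


def cronbach_alpha(scores: Matrix) -> float:
    """Cronbach's alpha, treating each station as an item."""
    k = len(scores[0])
    station_vars = [variance(col) for col in zip(*scores)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(station_vars) / total_var)


def g_study(scores: Matrix) -> Dict[str, float]:
    """One-facet crossed G-study (students x stations) from two-way ANOVA mean squares."""
    n_p, n_s = len(scores), len(scores[0])
    grand = mean(x for row in scores for x in row)
    person_means = [mean(row) for row in scores]
    station_means = [mean(col) for col in zip(*scores)]
    ss_p = n_s * sum((m - grand) ** 2 for m in person_means)
    ss_s = n_p * sum((m - grand) ** 2 for m in station_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ms_p = ss_p / (n_p - 1)
    ms_s = ss_s / (n_s - 1)
    ms_res = (ss_total - ss_p - ss_s) / ((n_p - 1) * (n_s - 1))
    # Variance components; negative estimates are truncated to zero (a common convention).
    var_p = max((ms_p - ms_res) / n_s, 0.0)
    var_s = max((ms_s - ms_res) / n_p, 0.0)
    var_res = ms_res
    # Error variances for a student's mean score over n_s stations.
    rel_err = var_res / n_s
    abs_err = (var_s + var_res) / n_s
    return {
        "G_relative": var_p / (var_p + rel_err),
        "Phi_absolute": var_p / (var_p + abs_err),
        "SEM_relative": math.sqrt(rel_err),
        "SEM_absolute": math.sqrt(abs_err),
    }


# Made-up scores for five students on four stations, for illustration only.
scores = [
    [70, 65, 80, 60],
    [55, 50, 62, 48],
    [85, 78, 90, 72],
    [60, 58, 70, 52],
    [45, 52, 58, 40],
]
print(round(cronbach_alpha(scores), 3), {k: round(v, 3) for k, v in g_study(scores).items()})
```

Both SEMs are on the scale of a student’s mean station score (in %), so they can be used directly for the ±1 SEM and ±2 SEM intervals described above.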

Additionally, we noticed a discrepancy between the examiners’ scores on the assessment scoresheet items and their overall Global Rating Score. The assessment (observed) score is based on the individual items the examiner observed (or did not observe).

Table 1 (published with permission). Cross-institutional comparison of Cronbach’s alpha with 1 and 2 SEM, and of the G-theory (GT) coefficient with 1 and 2 SEM (2017–2018). Cut-scores in percentages; Failed station/Global Rating Scale index and (number of stations); Cronbach’s alpha; Standard Error of Measurement (SEM) based on alpha, with 68% (1 × SEM) and 95% Confidence Interval (CI) (1.96 × SEM); relative G-coefficient generalizable to other assessments, with Norm-Referenced SEM (relative) and Criterion-Referenced SEM (absolute) (1). Umeå University uses a combination of a cut-score and the Global Rating Scale (2 Fail = fail overall). NTNU does not use an overall cut-score and refers instead to the computed pass mark per station (at least 6 out of 8 stations need to be passed).

The Global Rating Score (GRS), by contrast, is intended to reflect the examiner’s professional opinion, expressed as a Fail, Borderline, Pass, Good or Excellent performance. This discrepancy is reflected in the Failed station/GRS index: the higher the index, the greater the discrepancy between observed scores and professional judgment. A perfect index would be 1.
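The article does not define how the Failed station/GRS index is calculated. Purely to illustrate the idea of comparing scoresheet outcomes with examiners’ global judgments, the Python sketch below assumes a simple ratio: stations failed on the observed score divided by stations rated ‘Fail’ on the GRS. This formula, the `StationResult` type and the `failed_station_grs_index` function are hypothetical and are not Qpercom’s index; the station results are invented.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StationResult:
    score_pct: float  # observed scoresheet score (%)
    grs: str          # examiner's global rating: Fail, Borderline, Pass, Good or Excellent


def failed_station_grs_index(results: List[StationResult], station_cut: float) -> Optional[float]:
    """HYPOTHETICAL index: stations failed on score / stations rated 'Fail' on the GRS.

    A value of 1 means the two counts agree; larger values mean the scoresheet
    fails more stations than the examiners' global judgment does. Returns None
    when no station received a GRS of 'Fail', because the ratio is then undefined.
    """
    failed_by_score = sum(1 for r in results if r.score_pct < station_cut)
    failed_by_grs = sum(1 for r in results if r.grs == "Fail")
    if failed_by_grs == 0:
        return None
    return failed_by_score / failed_by_grs


results = [
    StationResult(45, "Fail"),
    StationResult(48, "Borderline"),
    StationResult(40, "Fail"),
    StationResult(62, "Pass"),
    StationResult(49, "Borderline"),
    StationResult(70, "Good"),
]
print(failed_station_grs_index(results, station_cut=50))  # prints 2.0
```

Here four stations fall below a 50% pass mark but examiners rated only two as ‘Fail’, giving 2.0. A ratio of counts cannot tell whether the same stations were failed by both measures, and values below 1 would also signal disagreement, so a real index would need a more careful definition.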

Back in the cockpit, this means that the actual height measured on the altimeter doesn’t correspond with the pilot’s impression of speed, height and distance (situational awareness) while approaching the airport. Qpercom is currently analysing the written feedback provided by examiners to see which measures actually prevent inappropriate decision-making in clinical skills assessments.

We hope this EU collaboration will open borders and that more universities are willing to share QA of their clinical skills and other assessments.

Challenges

Although only 8 of the 20 universities invited were willing to collaborate during the pandemic, we found major differences in educational decision-making processes. Only a few universities applied the Standard Error of Measurement (SEM) to reach a reliable decision about who should pass and who should fail. Qpercom decided to embed the SEM in its software to enhance this decision-making process. Furthermore, the political process of bringing all the universities together took about two years before the publication could be written up. As the data processor, Qpercom is in principle not responsible for QA within the data controllers; that is the responsibility of its clients. Nevertheless, retrieving, storing and analysing these data created a shared responsibility, as the data processor, to inform the data controllers.

So far, together, we have only provided a state-of-the-art platform as a first step. Because the approach is new and unique of its kind, we saw applying for this prestigious collaboration award as a great opportunity and an encouragement to take the next step.

About Qpercom

Qpercom Ltd delivers Quality, Performance and Competence measures. Qpercom spun out of NUI Galway’s School of Medicine in December 2008. We provide online global advanced assessment services, including assessment data analysis. Prof Timothy O’Brien, Dean of the College of Medicine, Nursing and Health Sciences, NUI Galway, Director of CCMI and REMEDI, said in 2020: “As Qpercom’s home base, we benefit from Qpercom’s collaboration with about 25 top-ranked universities worldwide.”

“As a medical consultant and one of NUIG’s senior examiners of the final-year OSCE, we are very pleased with Qpercom. We administer exam performance electronically and can analyse results extensively, and provide instant feedback to students. Compared to the paper-based approach 10 years ago, Qpercom brought the OSCE into the twenty-first century with their e-solution. It is an excellent examination tool, intuitive and user-friendly. As Qpercom’s home base we benefit from Qpercom’s collaboration with about 25 top-ranked universities worldwide.”

– Prof Timothy O’Brien, Dean of the College of Medicine, Nursing and Health Sciences, NUI Galway, Director of CCMI and REMEDI